Expanse

Expanse is a generative music composition: the music is created in real time by a system as it plays, rather than composed beforehand like traditional music. As a result, it never sounds exactly the same twice, is always changing, and can go on indefinitely for as long as the system keeps generating it.

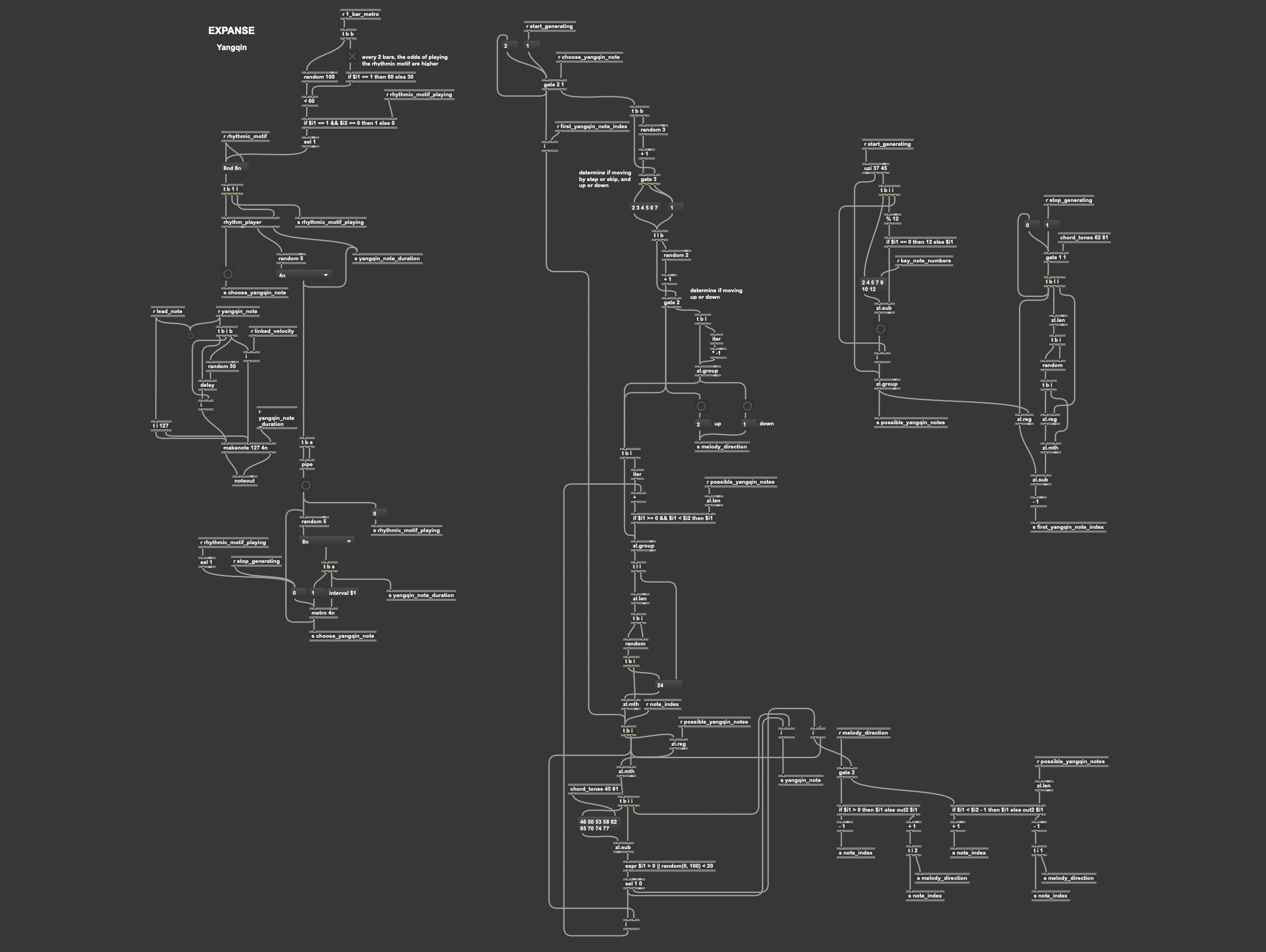

In this case, the “system” is a set of Max for Live patches, one per instrument, each placed on the corresponding Ableton MIDI track. The instruments are a synth bass, a harmonically rich pad, a sample-based synth that blends flute samples with mallet samples, another sample-based synth playing the yangqin (a Chinese hammered dulcimer), and airy synthetic keys. Each Max patch tells its instrument which notes to play and when, via MIDI messages. The notes, rhythms, melody, and harmony are all generated in real time by algorithms I designed in the Max patches. These algorithms also change the instruments’ timbres over time by modulating parameters such as distortion, attack time, and filter cutoff.

However, I did include some non-generative aspects in the piece. Field recordings of rain, birds, and wind play throughout, crossfading between textures at preset times. Two sections also bring in percussion (a gong and some metallic textures) at preset times, playing preset patterns. I also created the mix, such as levels and panning, manually. Another key non-generative aspect of the piece in its current form is its long-term structure: I bring instruments in and out of the composition at preset times using volume automation.

This structure, and the way the piece sounds overall, is meant to resemble a listener getting lost in a vast natural environment, such as an expansive mountain range or a lush rainforest; hence the name “Expanse”. The piece starts with just the field recordings, then more and more instruments gradually fade in as the listener becomes increasingly lost. Eventually the instruments drop out one by one, leaving only the field recordings at the end, as the listener finds their way. As a result, the piece currently has a fixed duration, whereas generative music typically lasts indefinitely. However, the underlying mechanisms for generating the harmony, melody, rhythm, and timbre changes can run forever if the volume automation is removed from the Ableton session.

You can view a recording of the piece playing within the Ableton session below, as well as an audio-only recording of a separate instance of the piece, to hear how it differs each time it plays. Below that, read on if you are interested in how I designed the algorithms that generate the music.

Many other works of generative music are characteristically soft, ambient, and easily ignorable. With this in mind, I set out to create something that commands a bit more attention. While it can still be considered ambient, particularly because it serves as a soundtrack for an environment, the rhythms and timbres in “Expanse” often do not make for soft, ignorable background music. Contributing factors include occasional heavy distortion on the flute and mallet instrument, punchy attacks and fast rhythms on several instruments, and the powerful percussion.

I also wanted the piece to depart from most ambient and generative music by incorporating musical elements that sound more like traditional, manually composed music and less like the output of an algorithm. To do so, I first composed a short non-generative passage using these instruments, then designed my note- and rhythm-generation algorithms to produce results similar to that passage. By analyzing what made the passage sound good, I determined that key components include a rhythmic motif and call-and-response between instruments.

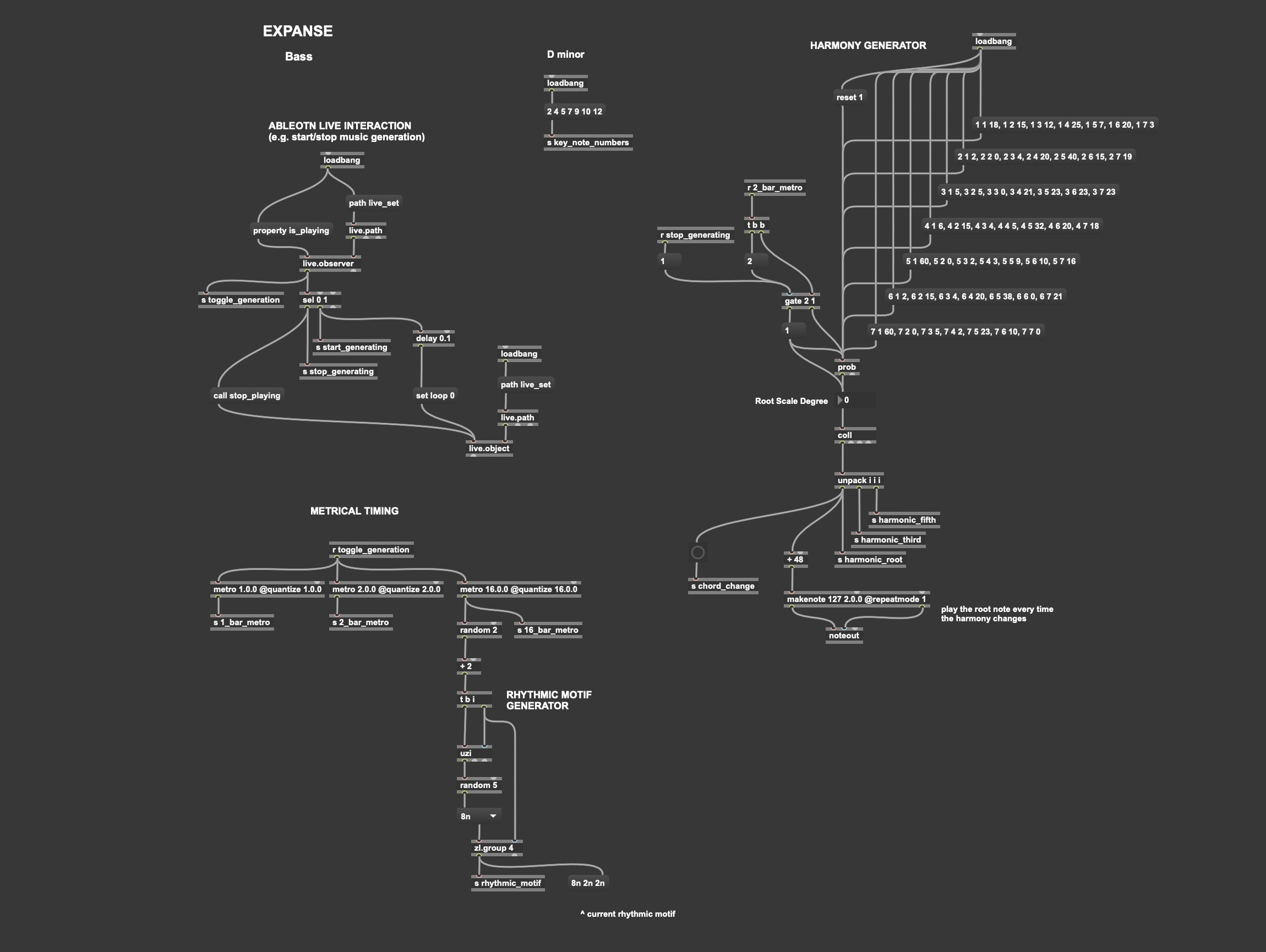

The patch on the bass serves as the conductor for the piece: it generates global elements such as the harmony and the current rhythmic motif, and sends them to the other instruments’ patches. Harmony generation uses a Markov chain that decides every two measures which scale degree to transition to next, with probability weightings that depend on the current scale degree. These probabilities are based on a combination of functional harmony (e.g. a predominant chord will most likely transition to the dominant) and my own experimentation with which chord changes sound better or worse with these instruments. The bass holds the root note of each chord until the chord changes. Every 16 measures, the algorithm selects a new rhythmic motif by choosing 2-3 note durations from a set of five possibilities. The bass itself does not play this rhythm; instead, it sends it to the other instruments, which play notes with these durations in the same order at different times. This also creates a call-and-response effect between instruments.
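To make the conductor logic concrete, here is a minimal Python sketch of a weighted Markov chain over scale degrees plus the 16-measure motif selection. It is not the Max patch itself. The transition weights in `TRANSITIONS`, the five values in `DURATIONS`, and the choice to allow repeated durations within a motif are all hypothetical, since the real values are not published; the names `next_degree`, `new_motif`, and `conduct` are illustrative.

```python
import random

# HYPOTHETICAL transition weights: the real values in the Max patch are not published.
# Keys are scale degrees (1 = tonic); each row maps next degree -> relative weight.
# Rows loosely follow functional harmony, e.g. predominants (2, 4) lean toward the dominant (5).
TRANSITIONS = {
    1: {4: 3, 6: 2, 2: 2, 5: 2, 3: 1},
    2: {5: 5, 4: 1, 1: 1},
    3: {6: 3, 4: 2, 1: 1},
    4: {5: 4, 1: 2, 2: 1},
    5: {1: 5, 6: 2, 4: 1},
    6: {4: 3, 2: 3, 5: 1},
}

# HYPOTHETICAL set of five note durations, in beats.
DURATIONS = [0.5, 1.0, 1.5, 2.0, 3.0]


def next_degree(current, rng):
    """Markov step: pick the next chord degree using the current degree's weights."""
    row = TRANSITIONS[current]
    return rng.choices(list(row), weights=list(row.values()), k=1)[0]


def new_motif(rng):
    """Choose 2-3 durations from the five possibilities (repeats allowed, an assumption)."""
    return rng.choices(DURATIONS, k=rng.randint(2, 3))


def conduct(num_measures, rng, start_degree=1):
    """Yield (measure, chord degree, motif): new chord every 2 measures, new motif every 16."""
    degree, motif = start_degree, None
    for measure in range(num_measures):
        if measure % 16 == 0:
            motif = new_motif(rng)
        if measure > 0 and measure % 2 == 0:
            degree = next_degree(degree, rng)
        yield measure, degree, motif
```

Iterating `conduct(32, random.Random(42))` gives one row per measure; in this sketch the bass would simply hold the root of `degree`, while `motif` would be forwarded to the other instruments. Keeping the weights in a dictionary of rows makes each row easy to tune by ear, which matches the mix of theory and experimentation described above.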

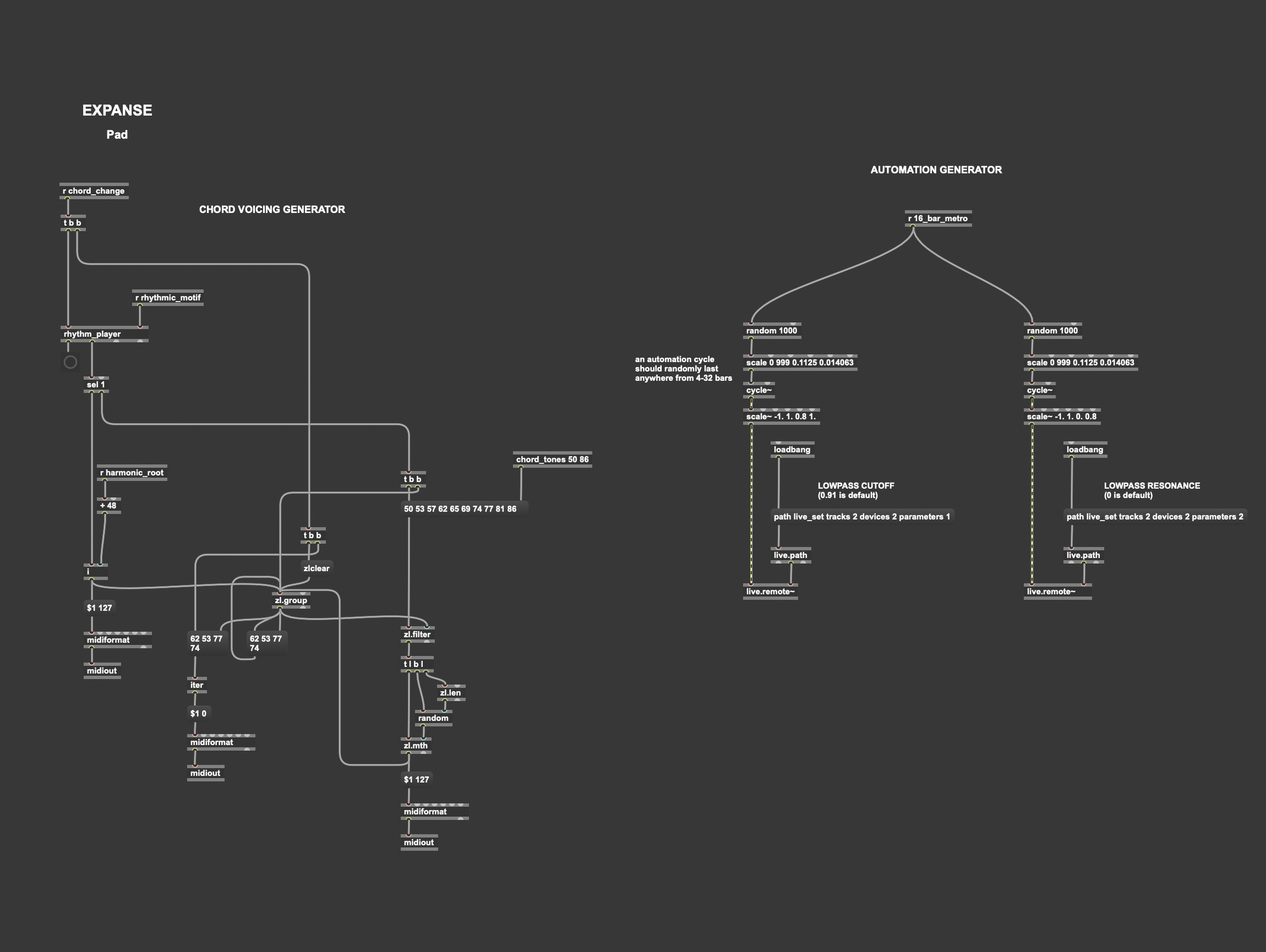

The harmony is primarily defined by the pad, which plays the rhythmic motif on every new chord, starting with the chord’s root. For each subsequent note in the motif, it randomly selects the root, third, or fifth, in any of several octaves within its range, and holds every note until the next chord.
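A minimal Python sketch of the pad’s behavior follows, assuming a C major key, diatonic triads, an 8-beat chord (two measures of 4/4), and a pad range of octaves 3 to 5. Starting the root in the lowest octave is also an assumption. The function names are hypothetical; this only illustrates the idea of building up a held voicing from the motif.

```python
import random

MAJOR_SCALE = [0, 2, 4, 5, 7, 9, 11]  # semitone offsets; C major is an assumed key


def degree_to_pitch(degree, octave):
    """MIDI note for a 1-based scale degree (degrees above 7 wrap into higher octaves)."""
    idx = degree - 1
    return 12 * (octave + 1 + idx // 7) + MAJOR_SCALE[idx % 7]


def chord_tones(root_degree):
    """Scale degrees of the root, third, and fifth of the diatonic triad."""
    return [root_degree, root_degree + 2, root_degree + 4]


def pad_voicing(root_degree, motif, chord_beats, rng, octaves=(3, 4, 5)):
    """Play the motif once over a chord; return (onset, pitch, duration) notes.

    The first note is the root; later notes are random chord tones in random octaves.
    Every note is held until the chord ends, so the voicing builds up over time.
    """
    notes, onset = [], 0.0
    for i, dur in enumerate(motif):
        if onset >= chord_beats:
            break
        if i == 0:
            pitch = degree_to_pitch(root_degree, octaves[0])
        else:
            pitch = degree_to_pitch(rng.choice(chord_tones(root_degree)), rng.choice(octaves))
        notes.append((onset, pitch, chord_beats - onset))
        onset += dur
    return notes
```

For example, `pad_voicing(5, [1.0, 0.5, 2.0], 8.0, random.Random(1))` always begins with `(0.0, 55, 8.0)`, a G3 held for the whole chord, followed by two randomly chosen G, B, or D notes entering on beats 1.0 and 1.5.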

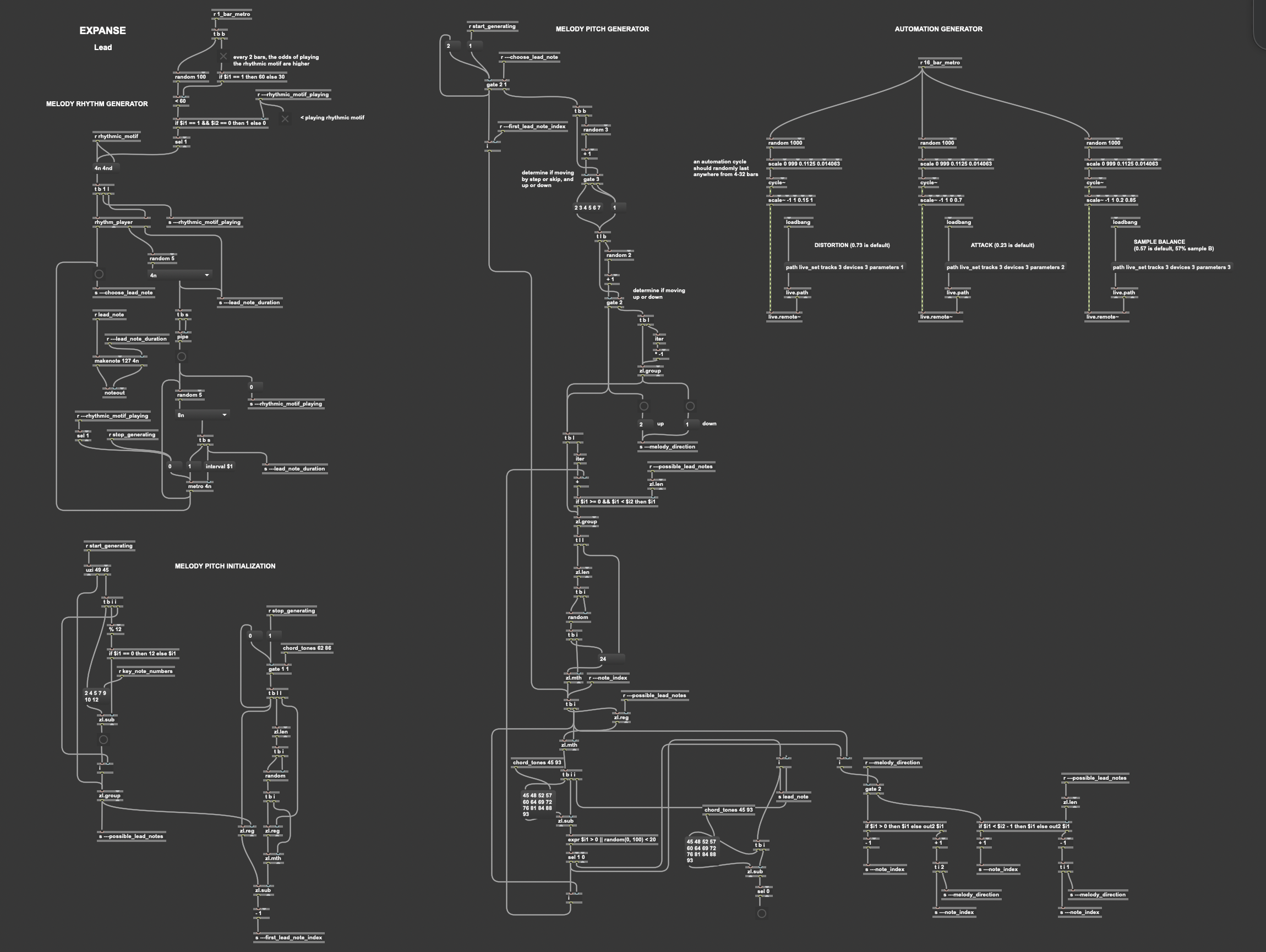

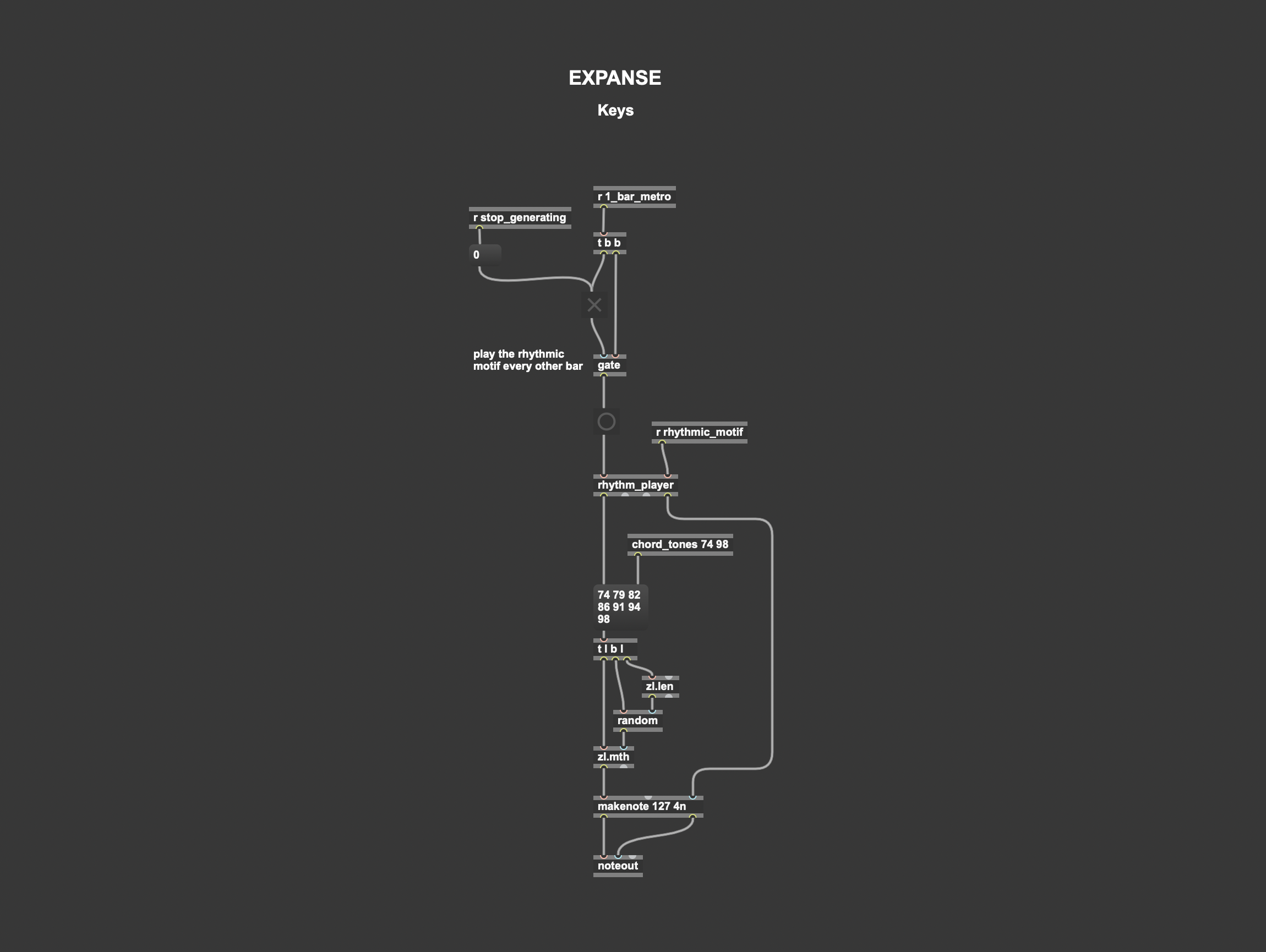

Call-and-response is further shaped by when each instrument decides to play the rhythmic motif. For example, the lead instrument (the sample-blending synth) has a chance to play it in every measure, but that chance is higher in odd-numbered measures, because the keys’ only job is to play the rhythmic motif in every even-numbered measure (randomly selecting notes from the current chord). This often creates a call-and-response between these two instruments. When the lead instrument is not playing the rhythmic motif, it randomly selects note durations from the same set of five possibilities. To shape the lead melody, for every new note it randomly decides whether to move up or down the scale and whether to move by a step or a skip (a step is twice as likely), along with the size of the skip if applicable, with a strong bias towards chord tones.
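The lead and keys logic can be sketched in Python as below. All numeric values are hypothetical: the per-measure motif probabilities, the skip sizes (2 to 4 scale degrees), and the `CHORD_BIAS` strength are not given in the text. The chord-tone bias is implemented here as “usually keep moving in the same direction until landing on a chord tone”, which is one plausible reading, not necessarily how the Max patch does it. Range limiting is omitted for brevity.

```python
import random

# HYPOTHETICAL values: the article gives no exact numbers.
DURATIONS = [0.5, 1.0, 1.5, 2.0, 3.0]   # the five possible durations, in beats
P_MOTIF_ODD, P_MOTIF_EVEN = 0.6, 0.2    # lead's chance to play the motif per measure
CHORD_BIAS = 0.8                        # chance of steering a non-chord tone to a chord tone


def keys_play_motif(measure):
    """The keys play the motif on every even-numbered (1-based) measure."""
    return measure % 2 == 0


def lead_rhythm(measure, motif, rng, beats_per_measure=4.0):
    """Return (durations, used_motif) for one measure of the lead."""
    p = P_MOTIF_ODD if measure % 2 == 1 else P_MOTIF_EVEN
    if rng.random() < p:
        return list(motif), True
    rhythm = []
    while sum(rhythm) < beats_per_measure:  # may overshoot the bar; ties are not modeled
        rhythm.append(rng.choice(DURATIONS))
    return rhythm, False


def is_chord_tone(degree, root):
    """True if a scale degree is the root, third, or fifth of the triad on root."""
    return (degree - root) % 7 in (0, 2, 4)


def next_melody_degree(current, root, rng, chord_bias=CHORD_BIAS):
    """Random walk over scale degrees; a step is twice as likely as a skip."""
    direction = rng.choice((-1, 1))
    interval = 1 if rng.random() < 2 / 3 else rng.randint(2, 4)  # skip size is assumed
    target = current + direction * interval
    # Bias toward chord tones: usually keep moving the same way to the nearest chord tone.
    if not is_chord_tone(target, root) and rng.random() < chord_bias:
        while not is_chord_tone(target, root):
            target += direction
    return target
```

Because the lead’s motif probability is higher on odd measures and the keys always take the even ones, the two instruments tend to alternate statements of the motif, which is the call-and-response effect described above.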

With the yangqin, I wanted to reinforce the lead melody with an additional timbre. However, when I naively ran the same patch on both instruments, it generated multiple melodies in polyphony on each, which sounded great on the yangqin but not so great on the more distorted and harmonically complex lead. I turned this bug into a feature by controlling it so that the lead plays only the original melody, monophonically, while the yangqin plays that melody along with some additional polyphonic textures (the result of the melody generation algorithm running again in parallel).
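The routing idea can be sketched in Python: run a melody generator several times in parallel, send only the first voice to the lead, and send every voice to the yangqin as chords. The simple random walk in `generate_voices` is a hypothetical stand-in for the real melody algorithm, and both function names are illustrative.

```python
import random


def generate_voices(num_voices, length, rng, start=8):
    """Run a melody generator num_voices times in parallel.

    The random walk here is a HYPOTHETICAL stand-in for the real melody algorithm.
    """
    voices = []
    for _ in range(num_voices):
        degree, line = start, []
        for _ in range(length):
            degree += rng.choice((-2, -1, 1, 2))
            line.append(degree)
        voices.append(line)
    return voices


def split_for_instruments(voices):
    """Lead gets only the first voice (monophonic); the yangqin gets every voice as chords."""
    lead = list(voices[0])
    yangqin = [sorted(set(step)) for step in zip(*voices)]
    return lead, yangqin
```

With `lead, yangqin = split_for_instruments(generate_voices(3, 16, random.Random(7)))`, each lead note also appears in the yangqin chord at the same step, so the yangqin doubles the melody while adding the extra parallel lines.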

Outside of Max, I used Ableton devices to randomize the MIDI velocity of each incoming note within a range and weighting specific to each instrument. In future generative compositions, I would like to explore what more I can do outside of Max, focusing on Ableton’s versatile devices and effects. I would also like to expand this piece to incorporate more generative elements, such as generating the long-term structure and percussion in Max rather than defining these elements manually.
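For illustration, the effect of the velocity randomization can be approximated in Python. This is not how Ableton’s devices work internally: the per-instrument ranges in `VELOCITY_PROFILES` are hypothetical, and a triangular distribution is swapped in as one simple way to express “a range plus a weighting”.

```python
import random

# HYPOTHETICAL per-instrument settings (low, high, mode), as MIDI velocities.
# The mode skews the weighting: values near it are the most likely.
VELOCITY_PROFILES = {
    "bass": (70, 100, 90),
    "pad": (40, 80, 55),
    "lead": (60, 127, 100),
    "yangqin": (50, 110, 75),
    "keys": (45, 90, 60),
}


def randomize_velocity(instrument, rng):
    """Draw a weighted random velocity within the instrument's range."""
    low, high, mode = VELOCITY_PROFILES[instrument]
    velocity = round(rng.triangular(low, high, mode))
    return max(1, min(127, velocity))  # keep it a valid, non-silent MIDI velocity
```

Calling `randomize_velocity("lead", random.Random())` for each incoming lead note yields velocities between 60 and 127 that cluster around 100, giving the lead consistent punch with some variation.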